BOP Challenge 2023

News about BOP Challenge 2023 (join the BOP Google group for all updates):

- 04/Apr/2024 - BOP Challenge 2023 report has been accepted to the Workshop on Computer Vision for Mixed Reality at CVPR 2024.

- 14/Mar/2024 - BOP Challenge 2023 report is on arXiv.

- 03/Oct/2023 - Winners of the BOP Challenge 2023 have been announced at the R6D workshop at ICCV 2023 (results, recording). Congratulations!

- 26/Sep/2023 - The submission deadline for BOP Challenge 2023 is extended to September 28, 9:00AM UTC.

- 07/Aug/2023 - The default detections/segmentations for Task 4 have been updated (results for some images were missing in the previous version).

- 07/Jul/2023 - A method for Task 4 can use default detections/segmentations (results of CNOS prepared by Van Nguyen Nguyen et al.).

- 06/Jul/2023 - Rule refinements: Submissions need to include the inference time (unlike in previous years, the time cannot be set to -1) and the methods need to be documented using a provided template (details). Definition of Tasks 4–6 was also refined: the object representation is required to be fixed after onboarding.

- 21/Jun/2023 - Definition of the onboarding input in Tasks 4–6 was refined.

- 15/Jun/2023 - Training dataset for Tasks 4–6 is now available.

- 07/Jun/2023 - BOP Challenge 2023 has been opened!

Results of the BOP Challenge 2023 are published in:

T. Hodaň, M. Sundermeyer, Y. Labbé, V. N. Nguyen, G. Wang, E. Brachmann, B. Drost, V. Lepetit, C. Rother, J. Matas

BOP Challenge 2023 on Detection, Segmentation and Pose Estimation of Seen and Unseen Rigid Objects, CVPRW 2024 (CV4MR workshop)

[PDF, SLIDES, VIDEO, BIB]

1. Introduction

To measure the progress in the field of object pose estimation, we created the BOP benchmark and have been organizing challenges on the benchmark datasets in conjunction with the R6D workshops since 2017. The year 2023 is no exception. The BOP benchmark is far from being solved, with pose estimation accuracy improving significantly in every challenge: the state of the art moved from 56.9 AR (Average Recall) in 2019, to 69.8 AR in 2020, and to new heights of 83.7 AR in 2022. Out of 49 pose estimation methods evaluated since 2019, the top 18 are from 2022. More details can be found in the BOP Challenge 2022 paper.

Besides the three tasks from 2022 (object detection, segmentation and pose estimation of objects seen during training), the 2023 challenge introduces new tasks of detection, segmentation and pose estimation of objects unseen during training. In the new tasks, methods need to learn novel objects during a short object onboarding stage (max 5 min per object, 1 GPU) and then recognize the objects in images from diverse environments. Such methods are of high practical relevance as they do not require expensive training for every new object, which is required by most existing methods and severely limits their scalability. This year, methods are provided with 3D mesh models during the onboarding stage. In the coming years, we are planning to introduce an even more challenging variant where only a few reference images of each object will be provided. The introduction of these tasks is encouraged by the recent breakthroughs in foundation models and their impressive few-shot learning capabilities.

An implicit goal of BOP is to identify the best synthetic-to-real domain transfer techniques. The capability of methods to effectively train on synthetic images is crucial as collecting ground-truth object poses for real images is prohibitively expensive. In 2020, to foster progress, we joined the development of BlenderProc, an open-source synthesis pipeline, and used it to generate photorealistic training images for the benchmark datasets. Methods trained on these images achieved major performance gains on real test images. However, we can still observe a performance drop due to the domain gap between the synthetic training and real test images. We therefore encourage participants to build on top of BlenderProc and publish their solutions.

2. Important dates

- Submission deadline for results: September 28, 2023 (9:00AM UTC), extended from the original deadline of September 26, 2023 (11:59PM UTC).

- Presentation of awards: October 3, 2023 at the R6D workshop at ICCV 2023.

3. Tasks

In 2023, methods are competing on the following six tasks. Task 1 has remained the same since 2019, Tasks 2 and 3 are the same as in 2022, and Tasks 4, 5 and 6 are new in 2023. As in previous years, only annotated object instances for which at least 10% of the projected surface area is visible are considered in the evaluation.
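The visibility criterion can be illustrated with a minimal Python sketch. It assumes that the visible fraction of each annotated instance is available as a `visib_fract` value in [0, 1] (our assumption, following the `scene_gt_info.json` files of the BOP toolkit format); `instances_to_evaluate` is a hypothetical helper, not part of the official evaluation code.

```python
from typing import Dict, List

# Minimum visible fraction of the projected surface for an instance to be evaluated.
MIN_VISIB_FRACT = 0.1


def instances_to_evaluate(gt_info: List[Dict[str, float]]) -> List[int]:
    """Return indices of annotated instances that enter the evaluation.

    Assumption: each entry carries a "visib_fract" value in [0, 1].
    """
    return [i for i, info in enumerate(gt_info) if info["visib_fract"] >= MIN_VISIB_FRACT]


# Example: three annotated instances in one image; the second is almost fully occluded.
gt_info = [{"visib_fract": 0.95}, {"visib_fract": 0.05}, {"visib_fract": 0.10}]
print(instances_to_evaluate(gt_info))  # [0, 2]
```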

Task 1: Model-based 6D localization of seen objects (leaderboards) – defined as in 2019, 2020, 2022

Training input: At training time, a method is provided a set of RGB-D training images showing objects annotated with ground-truth 6D poses, and 3D mesh models of the objects (typically with a color texture). A 6D pose is defined by a matrix $\mathbf{P} = [\mathbf{R} \, | \, \mathbf{t}]$, where $\mathbf{R}$ is a 3D rotation matrix and $\mathbf{t}$ is a 3D translation vector. The matrix $\mathbf{P}$ defines a rigid transformation from the 3D space of the object model to the 3D space of the camera.
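To make the pose convention concrete, here is a minimal NumPy sketch that assembles $\mathbf{P} = [\mathbf{R} \, | \, \mathbf{t}]$ and maps points from the model space to the camera space as $\mathbf{R}\mathbf{x} + \mathbf{t}$. The helper names `make_pose` and `model_to_camera` are hypothetical, and the units of `t` are left unspecified here.

```python
import numpy as np


def make_pose(R: np.ndarray, t: np.ndarray) -> np.ndarray:
    """Stack a 3x3 rotation R and a 3-vector t into the 3x4 matrix P = [R | t]."""
    # Sanity check: R must be orthonormal with determinant +1 (a proper rotation).
    if not np.allclose(R.T @ R, np.eye(3), atol=1e-6) or not np.isclose(np.linalg.det(R), 1.0):
        raise ValueError("R must be a proper rotation matrix")
    return np.hstack([R, np.asarray(t, dtype=float).reshape(3, 1)])


def model_to_camera(P: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map Nx3 points from the model space to the camera space: x_c = R x_m + t."""
    R, t = P[:, :3], P[:, 3]
    return pts @ R.T + t


# Example: rotate 90 degrees about the camera Z axis and shift 500 units along Z.
R = np.array([[0.0, -1.0, 0.0],
              [1.0, 0.0, 0.0],
              [0.0, 0.0, 1.0]])
t = np.array([0.0, 0.0, 500.0])
P = make_pose(R, t)
# The model point (10, 0, 0) lands at (0, 10, 500) in camera coordinates.
print(model_to_camera(P, np.array([[10.0, 0.0, 0.0]])))
```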

Test input: At test time, the method is given an RGB-D image unseen during training and a list $L = [(o_1, n_1), \dots, (o_m, n_m)]$, where $n_i$ is the number of instances of an object $o_i$ that are visible in the image. The method can use default detections (results of GDRNPPDet_PBRReal, the best 2D detection method from 2022 for Task 2).

Test output: The method produces a list $E = [E_1, \dots, E_m]$, where $E_i$ is a list of $n_i$ pose estimates with confidences for instances of object $o_i$.
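One simple way for a method to assemble the output $E$ from its internal pose candidates is sketched below: for each pair $(o_i, n_i)$ in $L$, keep the $n_i$ candidates with the highest confidence. `PoseEstimate` and `assemble_output` are hypothetical names, and ranking by confidence is an illustrative choice, not a rule of the challenge.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

import numpy as np


@dataclass
class PoseEstimate:
    R: np.ndarray  # 3x3 rotation matrix
    t: np.ndarray  # 3-vector translation
    score: float   # confidence of the estimate


def assemble_output(
    targets: List[Tuple[int, int]],
    candidates: Dict[int, List[PoseEstimate]],
) -> List[List[PoseEstimate]]:
    """Build E = [E_1, ..., E_m] from L = [(o_1, n_1), ..., (o_m, n_m)].

    For each object o_i, keep at most n_i candidates, most confident first.
    """
    output = []
    for obj_id, n_inst in targets:
        ranked = sorted(candidates.get(obj_id, []), key=lambda e: e.score, reverse=True)
        output.append(ranked[:n_inst])
    return output


# Example: object 1 has two visible instances, object 5 has one.
I, z = np.eye(3), np.zeros(3)
cands = {
    1: [PoseEstimate(I, z, 0.2), PoseEstimate(I, z, 0.9), PoseEstimate(I, z, 0.6)],
    5: [PoseEstimate(I, z, 0.7)],
}
E = assemble_output([(1, 2), (5, 1)], cands)
print([[e.score for e in E_i] for E_i in E])  # [[0.9, 0.6], [0.7]]
```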

Evaluation methodology: The error of an estimated pose w.r.t. the ground-truth pose is calculated by three pose-error functions (see Section 2.2 in the BOP 2020 paper):

- VSD (Visible Surface Discrepancy) which treats indistinguishable poses as equivalent by considering only the visible object part.

- MSSD (Maximum Symmetry-Aware Surface Distance) which considers a set of pre-identified global object symmetries and measures the surface deviation in 3D.

- MSPD (Maximum Symmetry-Aware Projection Distance) which considers the object symmetries and measures the perceivable deviation.
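MSSD and MSPD can be sketched directly from their definitions in the BOP 2020 paper: for each symmetry transformation $\mathbf{S}$ of the model, take the maximum distance over the model vertices between the points transformed by the estimated pose and by the ground-truth pose composed with $\mathbf{S}$ (in 3D for MSSD, after projection into the image for MSPD), and then take the minimum over all symmetries. The sketch also turns errors into a recall averaged over thresholds. It is a simplified illustration, assuming symmetries given as `(R_s, t_s)` pairs that include the identity and a pinhole camera matrix `K`; VSD is omitted because it needs rendered depth maps, and the evaluation itself is done by eval_bop19_pose.py. The MSPD thresholds in the example (5 to 50 px) are our assumption, following the BOP 2020 paper for a 640 px wide image.

```python
from typing import List, Tuple

import numpy as np

Sym = Tuple[np.ndarray, np.ndarray]  # (R_s, t_s) symmetry transformation of the model


def transform(R: np.ndarray, t: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Apply x -> R x + t to Nx3 points."""
    return pts @ R.T + t


def project(K: np.ndarray, pts_cam: np.ndarray) -> np.ndarray:
    """Pinhole projection of Nx3 camera-space points to Nx2 pixel coordinates."""
    uvw = pts_cam @ K.T
    return uvw[:, :2] / uvw[:, 2:3]


def mssd(R_e, t_e, R_g, t_g, pts, syms: List[Sym]) -> float:
    """min over symmetries S of max over vertices x of ||P_est x - P_gt S x|| (3D)."""
    est = transform(R_e, t_e, pts)
    return float(min(
        np.linalg.norm(est - transform(R_g, t_g, transform(R_s, t_s, pts)), axis=1).max()
        for R_s, t_s in syms
    ))


def mspd(R_e, t_e, R_g, t_g, pts, syms: List[Sym], K) -> float:
    """As MSSD, but distances are measured between 2D projections (pixels)."""
    est = project(K, transform(R_e, t_e, pts))
    return float(min(
        np.linalg.norm(est - project(K, transform(R_g, t_g, transform(R_s, t_s, pts))), axis=1).max()
        for R_s, t_s in syms
    ))


def average_recall(errors, thresholds) -> float:
    """Fraction of estimates with error < threshold, averaged over all thresholds."""
    errors = np.asarray(errors, dtype=float)
    return float(np.mean([np.mean(errors < th) for th in thresholds]))


# Four model points that are symmetric under a 180-degree rotation about Z.
pts = np.array([[10.0, 0, 0], [-10, 0, 0], [0, 10, 0], [0, -10, 0]])
I, Rz180, zero = np.eye(3), np.diag([-1.0, -1.0, 1.0]), np.zeros(3)
t = np.array([0.0, 0.0, 500.0])
K = np.array([[600.0, 0, 320], [0, 600, 240], [0, 0, 1]])
no_sym = [(I, zero)]
with_sym = [(I, zero), (Rz180, zero)]

# The estimate equals the ground truth rotated by 180 degrees about Z.
print(mssd(Rz180, t, I, t, pts, no_sym), mssd(Rz180, t, I, t, pts, with_sym))  # 20.0 0.0
print(mspd(Rz180, t, I, t, pts, no_sym, K), mspd(Rz180, t, I, t, pts, with_sym, K))  # 24.0 0.0
print(average_recall([0.0, 24.0], np.arange(5, 55, 5)))  # 0.8
```

Without the symmetry, the estimate is penalized even though it is visually indistinguishable from the ground truth; adding the symmetry brings both errors to zero.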

Task 2: Model-based 2D detection of seen objects (leaderboards) – defined as in 2022

Training input: At training time, a method is provided a set of training images showing objects that are annotated with ground-truth 2D bounding boxes. The boxes are amodal, i.e., covering the whole object silhouette including the occluded parts. The method can also use 3D mesh models that are available for the objects.

Test input: At test time, the method is given an RGB-D image unseen during training that shows an arbitrary number of instances of an arbitrary number of objects, with all objects being from one specified dataset (e.g. YCB-V). No prior information about the visible object instances is provided.

Test output: The method produces a list of object detections with confidences, with each detection defined by an amodal 2D bounding box.

Evaluation methodology: Following the evaluation methodology from the COCO 2020 Object Detection Challenge, the detection accuracy is measured by the Average Precision (AP). Specifically, a per-object $\text{AP}_O$ score is calculated by averaging the precision at multiple Intersection over Union (IoU) thresholds: $[0.5, 0.55, \dots, 0.95]$. The accuracy of a method on a dataset $D$ is measured by $\text{AP}_D$, calculated by averaging the per-object $\text{AP}_O$ scores, and the overall accuracy on the core datasets is measured by $\text{AP}_C$, defined as the average of the per-dataset $\text{AP}_D$ scores. Correct predictions for annotated object instances for which less than 10% of the projected surface area is visible (such instances are not considered in the evaluation) are filtered out and not counted as false positives. Up to 100 predictions per image (those with the highest confidence scores) are considered.
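The ingredients of this scoring hierarchy can be sketched in Python: IoU of two amodal boxes, the cap of 100 most confident predictions per image, and the averaging from per-threshold AP to $\text{AP}_O$, $\text{AP}_D$ and $\text{AP}_C$. The per-threshold AP itself (matching predictions to ground truth and integrating the precision-recall curve) is left to eval_bop22_coco.py and not reproduced here. The `[x, y, width, height]` box layout and all helper names are our assumptions.

```python
from typing import Dict, List, Sequence

import numpy as np

# IoU thresholds [0.5, 0.55, ..., 0.95].
IOU_THRESHOLDS = np.linspace(0.5, 0.95, 10)
MAX_DETS_PER_IMAGE = 100


def box_iou(a: Sequence[float], b: Sequence[float]) -> float:
    """IoU of two amodal boxes, each given as [x, y, width, height] (assumed layout)."""
    iw = max(0.0, min(a[0] + a[2], b[0] + b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[1] + a[3], b[1] + b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = a[2] * a[3] + b[2] * b[3] - inter
    return inter / union if union > 0 else 0.0


def keep_top_detections(dets: List[dict], k: int = MAX_DETS_PER_IMAGE) -> List[dict]:
    """Keep only the k most confident detections of one image."""
    return sorted(dets, key=lambda d: d["score"], reverse=True)[:k]


def ap_o(ap_per_threshold: Sequence[float]) -> float:
    """AP_O of one object: mean of its AP values at the 10 IoU thresholds."""
    assert len(ap_per_threshold) == len(IOU_THRESHOLDS)
    return float(np.mean(ap_per_threshold))


def mean_score(scores: Dict) -> float:
    """AP_D = mean of per-object AP_O scores; AP_C = mean of per-dataset AP_D scores."""
    return float(np.mean(list(scores.values())))


print(box_iou([0, 0, 10, 10], [5, 0, 10, 10]))  # 0.3333333333333333
ap_d = {"lmo": mean_score({1: 0.8, 5: 0.6}), "ycbv": mean_score({2: 0.5})}
print(mean_score(ap_d))  # AP_C over the two (illustrative) datasets
```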

Task 3: Model-based 2D segmentation of seen objects (leaderboards) – defined as in 2022

Training input: At training time, a method is provided a set of training images showing objects that are annotated with ground-truth 2D binary masks. The masks are modal, i.e., covering only the visible object part. The method can also use 3D mesh models that are available for the objects.

Test input: At test time, the method is given an RGB-D image unseen during training that shows an arbitrary number of instances of an arbitrary number of objects, with all objects being from one specified dataset (e.g. YCB-V). No prior information about the visible object instances is provided.

Test output: The method produces a list of object segmentations with confidences, with each segmentation defined by a modal 2D binary mask.

Evaluation methodology: As in Task 2, with the only difference being that IoU is calculated on binary masks instead of bounding boxes.
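For segmentation, the IoU is computed pixel-wise on binary masks. A minimal NumPy sketch follows (`mask_iou` is a hypothetical helper); because the masks are modal, only the visible pixels of an object contribute.

```python
import numpy as np


def mask_iou(a: np.ndarray, b: np.ndarray) -> float:
    """IoU of two modal binary masks of the same HxW shape."""
    a, b = a.astype(bool), b.astype(bool)
    union = np.logical_or(a, b).sum()
    if union == 0:
        return 0.0  # both masks are empty
    return float(np.logical_and(a, b).sum() / union)


# Example on a 4x4 image: the predicted mask covers columns 0-1, the GT mask columns 1-2.
pred = np.zeros((4, 4), dtype=np.uint8)
gt = np.zeros((4, 4), dtype=np.uint8)
pred[:, 0:2] = 1
gt[:, 1:3] = 1
print(mask_iou(pred, gt))  # 0.3333333333333333 (4 shared pixels / 12 pixels in the union)
```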

Task 4: Model-based 6D localization of unseen objects (leaderboards) – introduced in 2023

Training input: At training time, a method is provided a set of RGB-D training images showing training objects annotated with ground-truth 6D poses, and 3D mesh models of the objects (typically with a color texture). The 6D object pose is defined as in Task 1. The method can use 3D mesh models that are available for the training objects.

Object-onboarding input: The method is provided 3D mesh models of test objects that were not seen during training. To onboard each object (e.g. to render images/templates or fine-tune a neural network), the method can spend up to 5 minutes of wall-clock time on a single computer with up to one GPU. The time is measured from the point right after the raw data (e.g. 3D mesh models) is loaded to the point when the object is onboarded. The method can use a subset of the BlenderProc images (links "PBR-BlenderProc4BOP training images") originally provided for Tasks 1–3: it can use as many images from this set as could be rendered within the limited onboarding time (assume that rendering one image takes 2 seconds; rendering and any additional processing need to fit within the 5 minutes). The method can also render custom images/templates but cannot use any real images of the object in the onboarding stage. The object representation (which may be given by a set of templates, an ML model, etc.) needs to be fixed after onboarding (it cannot be updated on test images).
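The onboarding budget can be worked out explicitly. With no other processing, 5 minutes allow at most 300 / 2 = 150 of the provided PBR images per object; any other onboarding step reduces that number. The sketch below illustrates this arithmetic with a hypothetical helper `max_pbr_images`; it is not an official checker.

```python
# Onboarding budget from the Task 4 rules: 5 minutes of wall-clock time per object,
# with each provided PBR image counted as 2 seconds of rendering.
ONBOARDING_BUDGET_S = 5 * 60
SECONDS_PER_PBR_IMAGE = 2.0


def max_pbr_images(other_processing_s: float) -> int:
    """Max number of provided PBR images usable for one object, given the time
    (in seconds) spent on all other onboarding steps, e.g. template rendering."""
    remaining = ONBOARDING_BUDGET_S - other_processing_s
    if remaining <= 0:
        return 0
    return int(remaining // SECONDS_PER_PBR_IMAGE)


print(max_pbr_images(0))    # 150
print(max_pbr_images(100))  # 100
```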

Test input: At test time, the method is given an RGB-D image unseen during training and a list $L = [(o_1, n_1), \dots, (o_m, n_m)]$, where $n_i$ is the number of instances of a test object $o_i$ that are visible in the image. The method can use default detections/segmentations (results of CNOS prepared by Van Nguyen Nguyen et al. for the 2023 challenge).

Test output: As in Task 1.

Evaluation methodology: As in Task 1.

Task 5: Model-based 2D detection of unseen objects (leaderboards) – introduced in 2023

Training input: At training time, a method is provided a set of RGB-D training images showing training objects that are annotated with ground-truth 2D bounding boxes. The boxes are amodal, i.e., covering the whole object silhouette including the occluded parts. The method can also use 3D mesh models that are available for the training objects.

Object-onboarding input: As in Task 4.

Test input: At test time, the method is given an RGB-D image unseen during training that shows an arbitrary number of instances of an arbitrary number of test objects, with all objects being from one specified dataset (e.g. YCB-V). No prior information about the visible object instances is provided.

Test output: As in Task 2.

Evaluation methodology: As in Task 2.

Task 6: Model-based 2D segmentation of unseen objects (leaderboards) – introduced in 2023

Training input: At training time, a method is provided a set of RGB-D training images showing training objects that are annotated with ground-truth 2D binary masks. The masks are modal, i.e., covering only the visible object part. The method can also use 3D mesh models that are available for the training objects.

Object-onboarding input: As in Task 4.

Test input: As in Task 5.

Test output: As in Task 3.

Evaluation methodology: As in Task 3.

4. Datasets

Methods can be evaluated on any of the BOP datasets, but are encouraged to be evaluated at least on the following seven core datasets in order to qualify for the main awards.

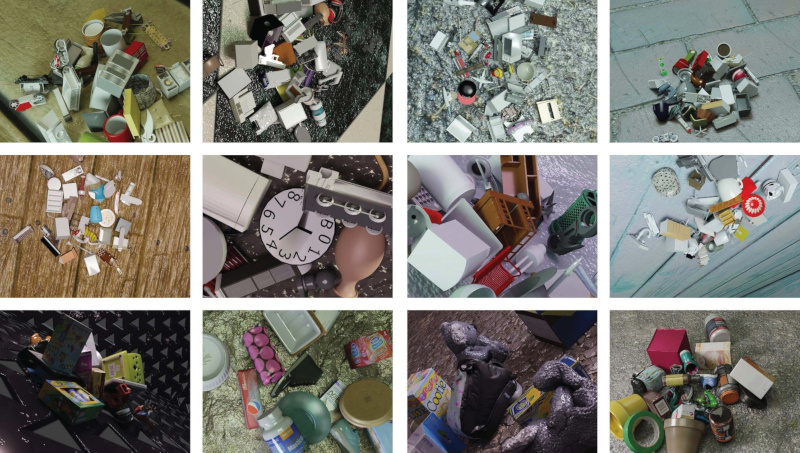

Training dataset for Tasks 4–6: We provide over 2M images showing more than 50K diverse objects (examples below). The images were originally synthesized for MegaPose using BlenderProc. The objects are from the Google Scanned Objects and ShapeNetCore datasets. Note that symmetry transformations are not available for these objects, but could be identified using these HALCON scripts (we used the scripts to identify symmetries of objects in the BOP datasets as described in Section 2.3 of the BOP Challenge 2020 paper; if you use the scripts to identify symmetries of the Google Scanned Objects and ShapeNetCore objects, sharing the symmetries would be appreciated).

5. Awards

Awards for 6D localization methods on Task 1:

- The Overall Best Method – The top method on the core datasets.

- The Best RGB-Only Method – The top RGB-only method on the core datasets.

- The Best Fast Method – The top method on the core datasets with the average running time per image below 1s.

- The Best BlenderProc-Trained Method – The top method on the core datasets trained only with the provided BlenderProc images (links "PBR-BlenderProc4BOP training images").

- The Best Single-Model Method – The top method on the core datasets which uses a single ML model per dataset.

- The Best Open-Source Method – The top method on the core datasets whose source code is publicly available.

- The Best Method Using Default Detections – The top method on the core datasets that relies on default detections for Task 1.

- The Best Method on Dataset D – The top method on each of the available datasets (does not need to be a core dataset).

Awards for 6D localization methods on Task 4:

- The Overall Best Method – The top method on the core datasets.

- The Best RGB-Only Method – The top RGB-only method on the core datasets.

- The Best Fast Method – The top method on the core datasets with the average running time per image below 1s.

- The Best BlenderProc-Trained Method – The top method on the core datasets trained only with the provided BlenderProc images from MegaPose.

- The Best Single-Model Method – The top method on the core datasets which uses a single ML model for all of the core datasets.

- The Best Open-Source Method – The top method on the core datasets whose source code is publicly available.

- The Best Method Using Default Detections/Segmentations – The top method on the core datasets that relies on default detections/segmentations for Task 4.

- The Best Method on Dataset D – The top method on each of the available datasets (does not need to be a core dataset).

Awards for 2D object detection methods (each award is given twice, once for Task 2 and once for Task 5):

- The Overall Best Detection Method – The top object detection method on the core datasets.

- The Best BlenderProc-Trained Detection Method – The top object detection method on the core datasets trained only with the provided BlenderProc images.

Awards for 2D object segmentation methods (each award is given twice, once for Task 3 and once for Task 6):

- The Overall Best Segmentation Method – The top object segmentation method on the core datasets.

- The Best BlenderProc-Trained Segmentation Method – The top object segmentation method on the core datasets trained only with the provided BlenderProc images.

6. How to participate

For the 6D localization tasks (Tasks 1 and 4), please follow instructions from BOP Challenge 2019. For the 2D detection/segmentation tasks (Tasks 2, 3, 5 and 6), please follow instructions from BOP Challenge 2022. The only difference in 2023 is that the inference time needs to be provided (unlike in previous years, it cannot be set to -1).

Results for all tasks should be submitted via this form. The online evaluation system uses script eval_bop19_pose.py to evaluate 6D localization results and script eval_bop22_coco.py to evaluate 2D detection/segmentation results.
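For reference, a minimal sketch of writing 6D localization results to a CSV file. The column layout (`scene_id,im_id,obj_id,score,R,t,time`, with `R` as 9 space-separated values in row-major order and `t` as 3 space-separated values) is our reading of the BOP Challenge 2019 instructions and should be checked against them; `write_results` is a hypothetical helper. Following the 2023 rule, it rejects a negative inference time instead of writing -1.

```python
import csv
import io
from typing import Iterable, TextIO, Tuple

import numpy as np

# Column layout assumed from the BOP Challenge 2019 instructions.
HEADER = ["scene_id", "im_id", "obj_id", "score", "R", "t", "time"]

# (scene_id, im_id, obj_id, score, R (3x3), t (3,), inference time in seconds)
Row = Tuple[int, int, int, float, np.ndarray, np.ndarray, float]


def write_results(rows: Iterable[Row], out: TextIO) -> None:
    writer = csv.writer(out, lineterminator="\n")
    writer.writerow(HEADER)
    for scene_id, im_id, obj_id, score, R, t, time_s in rows:
        if time_s < 0:
            raise ValueError("inference time must be provided (-1 is not allowed in 2023)")
        writer.writerow([
            scene_id, im_id, obj_id, score,
            " ".join(f"{v:.6f}" for v in np.asarray(R).ravel()),  # 3x3, row-major
            " ".join(f"{v:.6f}" for v in np.asarray(t).ravel()),  # translation
            time_s,
        ])


buf = io.StringIO()
write_results([(48, 1, 2, 0.9, np.eye(3), np.array([0.0, 0.0, 500.0]), 0.35)], buf)
print(buf.getvalue())
```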

IMPORTANT: When creating a method, please document your method in the "Description" field using this template:

*Submitted to:* BOP Challenge 2023

*Training data:*

Type of images (e.g. real, provided PBR, custom synthetic, real + provided PBR), etc.

*Onboarding data:*

Only for Tasks 4-6; type of images/templates, etc.

*Used 3D models:*

Default, CAD or reconstructed for T-LESS, etc.

*Notes:*

Values of important method parameters, used 2D detection/segmentation method, details about the object representation built during onboarding, etc.

6.3 Terms & conditions

- To be considered for the awards and for inclusion in a publication about the challenge, the authors need to provide documentation of the method (including specifications of the used computer) through the online submission form.

- The winners need to present their methods at the awards reception.

- After the submitted results are evaluated (by the online evaluation system), the authors can decide whether to make the scores visible to the public.

7. Organizers

Tomáš Hodaň, Reality Labs at Meta

Martin Sundermeyer, Google

Yann Labbé, Reality Labs at Meta

Van Nguyen Nguyen, ENPC ParisTech

Gu Wang, Tsinghua University

Eric Brachmann, Niantic

Bertram Drost, MVTec

Vincent Lepetit, ENPC ParisTech

Carsten Rother, Heidelberg University

Jiří Matas, Czech Technical University in Prague