BOP Challenge 2024

The sixth BOP challenge, focused on model-free/model-based 2D/6D object detection, and introducing three new datasets – HOT3D, HOPEv2, and HANDAL.

- 29/May/2024 - BOP Challenge 2024 has been opened!

1. Introduction

To measure the progress in 6D object pose estimation and related tasks, we created the BOP benchmark in 2017 and have been organizing challenges on the benchmark datasets together with the R6D workshops since then. The field has come a long way, with the accuracy in model-based 6D localization of seen objects improving by more than 50% (from 56.9 to 85.6 AR). In 2023, as the accuracy in this classical task had been slowly saturating, we introduced a more practical yet more challenging task of model-based 6D localization of unseen objects, where new objects need to be onboarded just from their CAD models in at most 5 minutes on 1 GPU. Notably, the best 2023 method for unseen objects achieved the same level of accuracy as the best 2020 method for seen objects, although its run time still awaits improvement. Besides 6D object localization, we have also been evaluating 2D object detection and 2D object segmentation. See the BOP 2023 paper for details.

In 2024, we introduce a new model-free variant of all tasks, define a new 6D object detection task, and introduce three new publicly available datasets.

1.1 New model-free tasks

While the model-based tasks are relevant for warehouse or factory settings, where CAD models of the target objects are often available, their applicability is limited in open-world scenarios. In 2024, we aim to bridge this gap by introducing new model-free tasks, where CAD models are not available and methods need to rapidly learn new objects just from reference video(s). Methods that can operate in the model-free setup will minimize the onboarding burden of new objects and unlock new types of applications, including augmented-reality systems capable of prompt object indexing and re-identification.

In the model-free tasks, a new object needs to be onboarded in at most 5 minutes on 1 GPU from reference videos showing all possible views of the object. We define two types of video-based onboarding:

Static onboarding: The object is static (standing on a desk) and the camera is moving around the object and capturing all possible object views. Two videos are available, one with the object standing upright and one with the object standing upside-down. Object masks and 6D object poses are available for all video frames, which may be useful for 3D object reconstruction using methods such as NeRF or Gaussian Splatting.

Dynamic onboarding: The object is manipulated by hands and the camera is either static (on a tripod) or dynamic (on a head-mounted device). Object masks for all video frames and the 6D object pose for the first frame are available, which may be useful for 3D object reconstruction using methods such as BundleSDF or Hampali et al. The dynamic onboarding setup is more challenging but more natural for AR/VR applications.

1.2 New 6D object detection task

Since the beginning of BOP, we have distinguished two object pose estimation tasks: 6D object localization, where identifiers of present object instances are provided for each test image, and 6D object detection, where no prior information is provided. Up until now, we have been evaluating methods for object pose estimation only on 6D object localization. We focused on this task because (1) pose accuracy on this simpler task had not been saturated, and (2) evaluating this task requires only calculating the recall rate, which is noticeably less expensive than calculating the precision/recall curve required for evaluating 6D detection (see Appendix A.1 of the BOP 2020 paper for details).
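To illustrate why scoring 6D detection is costlier than scoring 6D localization, the following Python sketch contrasts the two quantities on a toy set of estimates. It is a generic illustration and not the BOP toolkit implementation: the function names, the all-point interpolation of the precision/recall curve, and the assumption that each estimate has already been marked as correct or incorrect are choices made here for the example.

```python
import numpy as np


def recall(correct_flags, num_gt):
    """Fraction of annotated instances matched by a correct estimate.

    This is all that 6D localization needs, because the instances to be
    localized are given and confidence scores play no role.
    """
    return float(np.sum(correct_flags)) / num_gt


def average_precision(scores, correct_flags, num_gt):
    """Area under the precision/recall curve (all-point interpolation).

    Hypothetical helper: 6D detection needs the whole curve, so estimates
    must be ranked by confidence and precision tracked at every rank.
    """
    order = np.argsort(-np.asarray(scores, dtype=float), kind="stable")
    tp = np.asarray(correct_flags, dtype=float)[order]
    cum_tp = np.cumsum(tp)
    cum_fp = np.cumsum(1.0 - tp)
    rec = cum_tp / num_gt
    prec = cum_tp / (cum_tp + cum_fp)
    # Enforce a monotonically non-increasing precision envelope.
    prec = np.maximum.accumulate(prec[::-1])[::-1]
    # Sum precision over the recall increments.
    rec = np.concatenate(([0.0], rec))
    return float(np.sum((rec[1:] - rec[:-1]) * prec))


# Toy example: four estimates, four annotated instances, one false positive.
toy_scores = [0.9, 0.8, 0.7, 0.6]
toy_correct = [1, 0, 1, 1]
print(recall(toy_correct, 4), average_precision(toy_scores, toy_correct, 4))
```

The recall is a single ratio, whereas the average precision depends on how the false positive is ranked among the true positives, which is why confidence scores matter for detection but not for localization.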

This year, we are happy to bring the option of also evaluating on the 6D object detection task. This is possible thanks to new GPUs that we secured for the BOP evaluation system, run-time improvements in the BOP toolkit (multiprocessing and metrics implemented in PyTorch), and a simpler evaluation methodology – only MSSD and MSPD metrics are used for 6D detection, not VSD (see Section 2.2 of the BOP 2020 paper for the definition of the metrics). The VSD metric is more expensive to calculate and requires depth images. Besides speeding up the evaluation, omitting VSD thus enables us to evaluate on RGB-only datasets.
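As a rough illustration of the two metrics kept for 6D detection, the sketch below computes MSSD (maximum symmetry-aware surface distance) and MSPD (maximum symmetry-aware projection distance) with NumPy. It is meant to follow the definitions in Section 2.2 of the BOP 2020 paper: the maximum error over model vertices, minimized over the object's symmetry transformations. It is not the BOP toolkit code; the function names, the argument layout, and the representation of symmetries as a list of (R, t) pairs that includes the identity are assumptions made for this example.

```python
import numpy as np


def transform(R, t, pts):
    """Apply a rigid transform (3x3 R, 3-vector t) to an Nx3 array of points."""
    return pts @ np.asarray(R).T + np.asarray(t).reshape(1, 3)


def project(K, R, t, pts):
    """Project Nx3 model points to Nx2 pixel coordinates with intrinsics K."""
    cam = transform(R, t, pts)
    uv = cam @ np.asarray(K).T
    return uv[:, :2] / uv[:, 2:3]


def mssd(R_est, t_est, R_gt, t_gt, pts, syms):
    """Max 3D vertex distance, minimized over symmetries (assumed layout)."""
    est = transform(R_est, t_est, pts)
    errs = []
    for R_s, t_s in syms:
        gt = transform(R_gt, t_gt, transform(R_s, t_s, pts))
        errs.append(np.linalg.norm(est - gt, axis=1).max())
    return float(min(errs))


def mspd(R_est, t_est, R_gt, t_gt, K, pts, syms):
    """Max 2D projection distance in pixels, minimized over symmetries."""
    est = project(K, R_est, t_est, pts)
    errs = []
    for R_s, t_s in syms:
        gt = project(K, R_gt, t_gt, transform(R_s, t_s, pts))
        errs.append(np.linalg.norm(est - gt, axis=1).max())
    return float(min(errs))


# Toy data: an object with a 180-degree symmetry around its z axis.
identity = (np.eye(3), np.zeros(3))
flip_z = (np.diag([-1.0, -1.0, 1.0]), np.zeros(3))
model_pts = np.array([[1.0, 0, 0], [0, 2.0, 0], [0, 0, 3.0], [1.0, 2.0, 3.0]])
t_cam = np.array([0.0, 0.0, 100.0])
print(mssd(flip_z[0], t_cam, np.eye(3), t_cam, model_pts, [identity, flip_z]))
```

Neither function reads a depth image: both need only the model vertices, the two poses and, for MSPD, the camera intrinsics, which is consistent with these metrics being usable on RGB-only datasets.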

1.3 New datasets

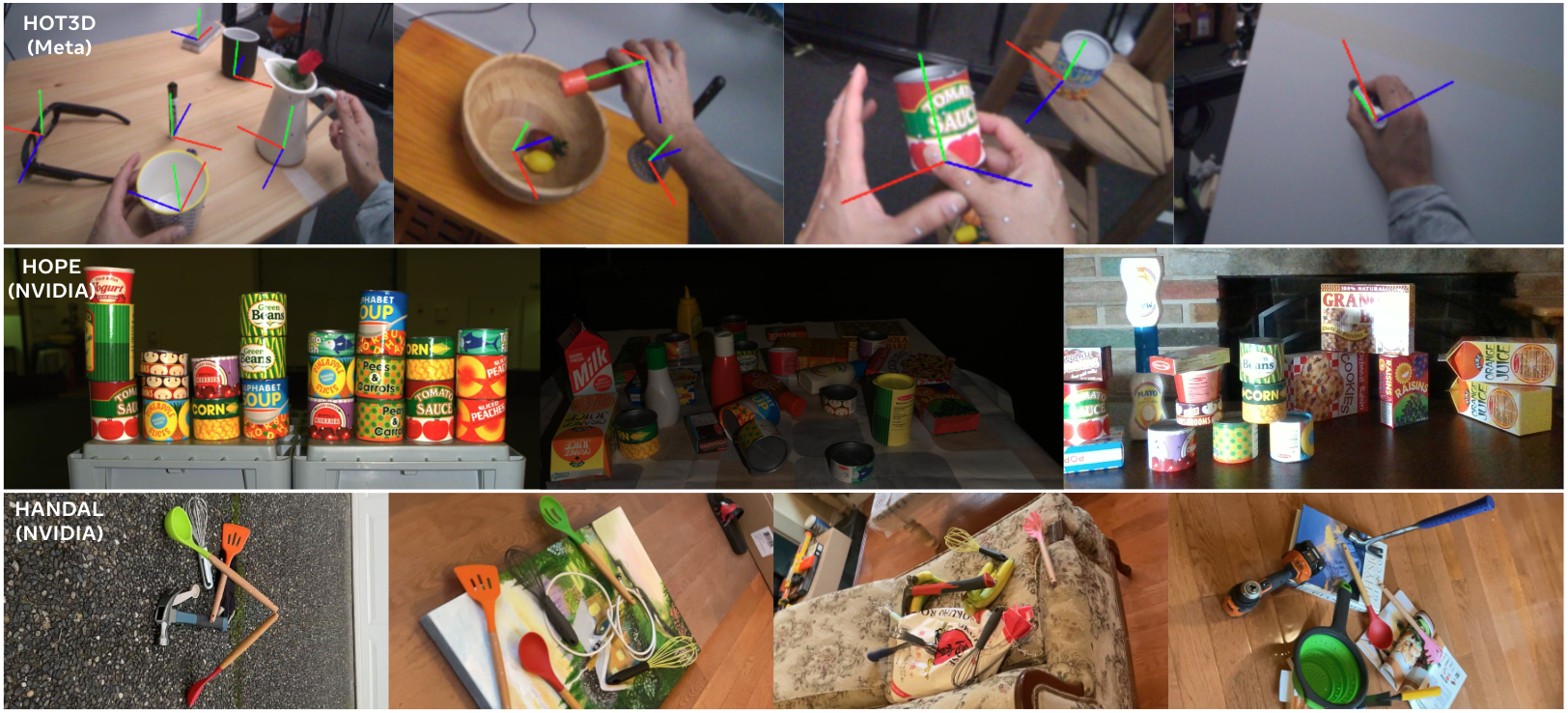

In 2024, we also introduce three new datasets which enable evaluation of the model-based and model-free task variants. All these datasets include both CAD models and onboarding videos:

HOT3D (coming soon): A dataset for egocentric hand and object tracking in 3D. The dataset offers multi-view RGB and monochrome image streams showing participants interacting with 33 diverse rigid objects. Besides a simple inspection scenario, where participants pick up, observe, and put down the objects, the recordings show scenarios resembling typical actions in the kitchen, office, and living room. The dataset is recorded by two recent head-mounted devices from Meta: Project Aria, which is a research prototype of light-weight AI glasses, and Quest 3, which is a production VR headset that has been sold in millions of units. The images are annotated with high-quality ground-truth 3D poses and shapes of hands and objects. The ground-truth poses were obtained by a professional motion-capture system using small optical markers, which were attached to the hands and objects and tracked by multiple infrared cameras. Hands are represented by the standard MANO model and objects by 3D mesh models with PBR materials obtained by an in-house scanner. HOT3D is also used for the HANDS Challenge 2024 (website TBD) and can thus be used for evaluating methods for hand and object pose estimation (hand and object poses can be submitted to the HANDS and BOP evaluation servers respectively).

HOPEv2 (Tyree et al. IROS 2022): A dataset for robotic manipulation composed of 28 toy grocery objects, available from online retailers for about 50 USD. The original HOPE dataset was captured as 50 cluttered, single-view scenes in household/office environments, each with up to 5 lighting variations including backlighting and angled direct lighting with cast shadows. For the BOP Challenge 2024, we release an updated version of HOPE with additional testing images collected from 7 cluttered scenes, each captured from multiple views by a moving camera.

HANDAL (Guo et al. IROS 2023): A dataset with graspable or manipulable objects, such as hammers, ladles, cups, and power drills. Objects are captured from multiple views in cluttered scenes. As with HOPE, we have captured additional testing images for BOP Challenge 2024. While the original dataset has 212 objects of 17 categories, we only consider 40 objects of 7 categories, each with high-quality CAD models created by 3D artists.

2. Important dates

- Submission deadline for results: September 18, 2024 (11:59PM UTC).

- Presentation of awards: September 29, 2024 at the R6D workshop at ECCV 2024.

3. Challenge tracks

Challenge tracks on BOP-Classic-Core datasets:

- Track 1: Model-based 6D localization of unseen objects on BOP-Classic-Core

- Track 2: Model-based 6D detection of unseen objects on BOP-Classic-Core

- Track 3: Model-based 2D detection of unseen objects on BOP-Classic-Core

Challenge tracks on BOP-H3 core datasets:

- Track 4: Model-based 6D detection of unseen objects on BOP-H3

- Track 5: Model-based 2D detection of unseen objects on BOP-H3

- Track 6: Model-free 6D detection of unseen objects on BOP-H3

- Track 7: Model-free 2D detection of unseen objects on BOP-H3

Besides tracks on 6D and 2D detection, we also have a track (Track 1) on model-based 6D localization of unseen objects, which is a task introduced in 2023 that is still enjoying an active leaderboard. Note that leaderboards for all tasks stay open. However, only results from the tracks defined above will be recognized in the 2024 challenge.

4. Awards

Awards for all tracks:

- The Overall Best Method – The top method on the core datasets.

- The Best Fast Method – The top method on the core datasets with the average running time per image below 1s.

- The Best Open-Source Method – The top method on the core datasets whose source code is publicly available.

Extra awards for tracks on BOP-Classic-Core datasets:

- The Best RGB-Only Method – The top RGB-only method on the core datasets.

Extra awards for tracks on 6D localization/detection:

- The Best Method Using Default Detections/Segmentations – The top method that relies on provided default detections/segmentations.

5. How to participate

For tracks on the 6D localization and 6D detection tasks (Tracks 1, 2, 4, 6), please follow instructions from BOP Challenge 2019. For tracks on the 2D detection tasks (Tracks 3, 5, 7), please follow instructions from BOP Challenge 2022.

Results for all tracks should be submitted via this form. The online evaluation system uses script eval_bop19_pose.py to evaluate 6D localization results, script eval_bop24_pose.py to evaluate 6D detection results, and script eval_bop22_coco.py to evaluate 2D detection/segmentation results.
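For orientation, the sketch below writes pose estimates to a CSV file. It assumes the layout described in the BOP Challenge 2019 instructions, namely one estimate per line with the columns scene_id, im_id, obj_id, score, R, t, time, where R is a row-wise 3x3 rotation matrix and t a translation vector, both space-separated. It further assumes that the same layout applies to 6D detection results. The helper names are hypothetical and the layout should be verified against the linked instructions before submitting.

```python
import csv
import io

# Column names assumed from the BOP Challenge 2019 instructions.
HEADER = ["scene_id", "im_id", "obj_id", "score", "R", "t", "time"]


def format_row(scene_id, im_id, obj_id, score, R, t, time_s):
    """Build one CSV row (hypothetical helper).

    R is a 3x3 nested list saved row-wise, t a 3-vector (assumed to be in mm),
    and time_s the run time in seconds for the whole image.
    """
    r_str = " ".join(f"{v:.6f}" for row in R for v in row)
    t_str = " ".join(f"{v:.6f}" for v in t)
    return [scene_id, im_id, obj_id, f"{score:.6f}", r_str, t_str, f"{time_s:.3f}"]


def write_results(estimates, stream):
    """Write a header line followed by one line per pose estimate."""
    writer = csv.writer(stream, lineterminator="\n")
    writer.writerow(HEADER)
    for est in estimates:
        writer.writerow(format_row(**est))


# Usage with an in-memory buffer; a real submission would write to a file.
example = [dict(scene_id=1, im_id=3, obj_id=5, score=0.9,
                R=[[1, 0, 0], [0, 1, 0], [0, 0, 1]],
                t=[10.0, 20.0, 300.0], time_s=0.25)]
buffer = io.StringIO()
write_results(example, buffer)
print(buffer.getvalue())
```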

We will also share more details on how to evaluate on HOT3D and HANDAL.

IMPORTANT: When creating a method, please document your method in the "Description" field using this template:

*Submitted to:* BOP Challenge 2024

*Training data:*

Type of images (e.g. real, provided PBR, custom synthetic, real + provided PBR), etc.

*Onboarding data:*

Only for Tracks 6 and 7; type of onboarding (static, dynamic, model-based), etc.

*Used 3D models:*

Default, CAD or reconstructed for T-LESS, etc.

*Notes:*

Values of important method parameters, used 2D detection/segmentation method, details about the object representation built during onboarding, etc.

Terms & conditions

- To be considered for the awards and for inclusion in a publication about the challenge, the authors need to provide their full names and documentation of the method (including specifications of the used computer) through the online submission form.

- The winners need to present their methods at the awards reception.

- After the submitted results are evaluated (by the online evaluation system), the authors can decide whether to make the scores visible to the public.

6. Organizers

Van Nguyen Nguyen, ENPC ParisTech

Stephen Tyree, NVIDIA

Andrew Guo, University of Toronto, NVIDIA

Médéric Fourmy, Czech Technical University in Prague

Anas Gouda, TU Dortmund

Lukas Ranftl, MVTec

Jonathan Tremblay, NVIDIA

Eric Brachmann, Niantic

Bertram Drost, MVTec

Vincent Lepetit, ENPC ParisTech

Carsten Rother, Heidelberg University

Stan Birchfield, NVIDIA

Jiří Matas, Czech Technical University in Prague

Yann Labbé, Reality Labs at Meta

Martin Sundermeyer, Google

Tomáš Hodaň, Reality Labs at Meta