Datasets

The datasets are split into groups that may differ in the type of included data and in the tasks that can be evaluated on them:

| Group | Datasets | Content | Supported tasks |
|---|---|---|---|
| BOP-Classic | BOP-Classic-Core: LM-O, T-LESS, ITODD, HB, YCB-V, IC-BIN, TUD-L (seven datasets used for BOP challenges since 2019) | | |
| | BOP-Classic-Extra: LM, HOPEv1, RU-APC, IC-MI, TYO-L | | |
| BOP-H3 | HOT3D (coming soon), HOPEv2, HANDAL (coming soon) | | |

Format and download instructions

The datasets are provided on the BOP HuggingFace Hub in the BOP format. The BOP toolkit expects all datasets to be stored in the same folder, each dataset in a subfolder named with the base name of the dataset (e.g. "lm", "lmo", "tless"). The example below shows how to download and unpack one of the datasets (LM) from bash (the names of the archives for the other datasets can be seen in the download links below):

```bash
export SRC=https://huggingface.co/datasets/bop-benchmark/datasets/resolve/main
wget "$SRC/lm/lm_base.zip"       # Base archive with dataset info, camera parameters, etc.
wget "$SRC/lm/lm_models.zip"     # 3D object models.
wget "$SRC/lm/lm_test_all.zip"   # All test images ("_bop19" for a subset used in the BOP Challenge 2019/2020).
wget "$SRC/lm/lm_train_pbr.zip"  # PBR training images (rendered with BlenderProc4BOP).

unzip lm_base.zip                # Contains folder "lm".
unzip lm_models.zip -d lm        # Unpacks to "lm".
unzip lm_test_all.zip -d lm      # Unpacks to "lm".
unzip lm_train_pbr.zip -d lm     # Unpacks to "lm".
```
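To fetch several archives of one dataset, the commands above can be wrapped in a small helper. The sketch below only prints the URLs (a dry run), so the list can be inspected first and then piped to `wget -i -`. It assumes that every dataset follows the same `<name>/<name>_<part>.zip` naming pattern as LM; the function name `bop_urls` is hypothetical, and the part names should be checked against the actual download links before use.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print download URLs for the given archive parts of one
# BOP dataset. Assumes the "<name>/<name>_<part>.zip" pattern used for LM above.
SRC=${SRC:-https://huggingface.co/datasets/bop-benchmark/datasets/resolve/main}

bop_urls() {
  local name=$1
  shift
  local part
  for part in "$@"; do
    # e.g. name=lm, part=models -> $SRC/lm/lm_models.zip
    printf '%s/%s/%s_%s.zip\n' "$SRC" "$name" "$name" "$part"
  done
}
```

For example, `bop_urls lm base models test_all train_pbr | wget -i -` would download the same four archives as the commands above.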

The datasets can also be downloaded using the HuggingFace CLI and unpacked using extract_bop.sh (more options are available at bop-benchmark):

```bash
pip install -U "huggingface_hub[cli]"
export LOCAL_DIR=./datasets/
export NAME=lm
huggingface-cli download bop-benchmark/datasets --include "$NAME/*" --local-dir $LOCAL_DIR --repo-type=dataset
bash extract_bop.sh
```
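Since the BOP toolkit expects one subfolder per dataset, named with the dataset's base name, a quick check of the unpacked layout can catch a misplaced `-d` flag early. The function `check_bop_layout` below is a hypothetical sketch; which entries to expect (for LM, e.g. `camera.json` from the base archive and the `models`, `test`, `train_pbr` folders) is an assumption you should adapt to the archives you actually downloaded.

```bash
#!/usr/bin/env bash
# Hypothetical helper: verify that <root>/<name> exists and contains the given
# entries (files or folders). Prints each missing path and returns 1 if any is missing.
check_bop_layout() {
  local root=$1 name=$2
  shift 2
  local dir="$root/$name" entry missing=0
  if [ ! -d "$dir" ]; then
    echo "missing: $dir"
    return 1
  fi
  for entry in "$@"; do
    if [ ! -e "$dir/$entry" ]; then
      echo "missing: $dir/$entry"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage, assuming the files ended up under `$LOCAL_DIR/$NAME`: `check_bop_layout "$LOCAL_DIR" "$NAME" camera.json models test train_pbr`.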

The MegaPose training dataset is provided for the tasks on unseen objects of the 2023 and 2024 challenges.

HOT3D

Banerjee et al.: Introducing HOT3D: An Egocentric Dataset for 3D Hand and Object Tracking, paper, project website, license agreement.

HOT3D is a dataset for egocentric hand and object tracking in 3D. The dataset offers multi-view, RGB/monochrome, fisheye image streams showing 19 subjects interacting with 33 diverse rigid objects. The dataset also offers comprehensive ground-truth annotations including 3D poses of objects, hands, and cameras, and 3D models of hands and objects. In addition to simple pick-up/observe/put-down actions, HOT3D contains scenarios resembling typical actions in kitchen, office, and living room environments. The dataset is recorded by two head-mounted devices from Meta: Project Aria, a research prototype of light-weight AR/AI glasses, and Quest 3, a production VR headset sold in millions of units. Ground-truth poses were obtained by a professional motion-capture system using small optical markers attached to hands and objects. Hand annotations are provided in the UmeTrack and MANO formats, and objects are represented by 3D meshes with PBR materials obtained by an in-house scanner. Recordings from Aria also include the eye gaze signal and scene point clouds.

In BOP, we use HOT3D-Clips, which is a curated subset of HOT3D. Each clip has 150 frames (5 seconds) which are all annotated with ground-truth poses of all modeled objects and hands and which passed visual inspection. There are 4117 clips in total, 2969 clips extracted from the training split and 1148 from the test split of HOT3D (the ground-truth object poses are publicly available only for the training split). The HOT3D-Clips subset is also used in the Multiview Egocentric Hand Tracking Challenge.

See the HOT3D whitepaper for details and the HOT3D toolkit for examples of how to use HOT3D-Clips (how to load the clips, optionally undistort the fisheye images, render using the fisheye cameras, etc.).

HOPE (NVIDIA Household Objects for Pose Estimation)

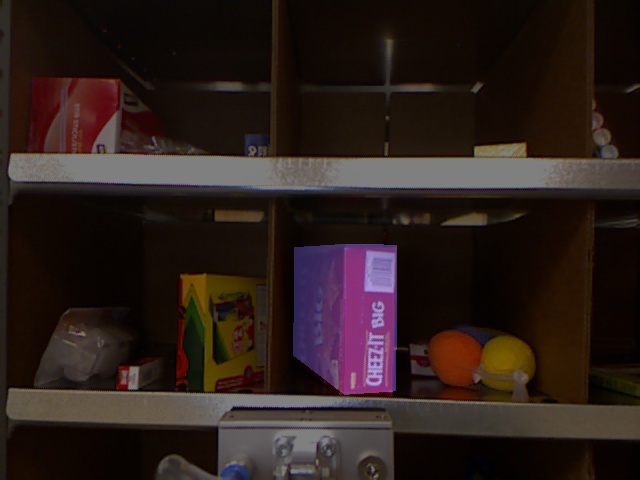

Tyree et al.: 6-DoF Pose Estimation of Household Objects for Robotic Manipulation: An Accessible Dataset and Benchmark, IROS 2022, project website, license: CC BY-SA 4.0.

28 toy grocery objects are captured in 50 scenes from 10 household/office environments. Up to 5 lighting variations are captured for each scene, including backlighting and angled direct lighting with cast shadows. Scenes are cluttered with varying levels of occlusion. The collection of toy objects is available from online retailers for about 50 USD (see "dataset_info.md" in the base archive for details).

We split the BlenderProc training images into three parts: hope_train_pbr.zip (Part 1), hope_train_pbr.z01 (Part 2), and hope_train_pbr.z02 (Part 3). Once downloaded, these files can be unzipped directly using `7z x hope_train_pbr.zip` or re-merged using `zip -s0 hope_train_pbr.zip --out hope_train_pbr_all.zip`.
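Both `7z x` and `zip -s0` need every part of a split archive in the same folder. The hypothetical helper `check_split_parts` below checks that the `.z01`, `.z02` (and so on) parts and the final `.zip` are all present before merging; it assumes you pass the number of parts yourself, e.g. 3 for `hope_train_pbr`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that all parts of a split zip archive are present.
# An archive split into N parts consists of <base>.z01 ... <base>.z(N-1) plus <base>.zip.
check_split_parts() {
  local base=$1 count=$2 i part ok=0
  for ((i = 1; i < count; i++)); do
    part=$(printf '%s.z%02d' "$base" "$i")
    if [ ! -f "$part" ]; then
      echo "missing: $part"
      ok=1
    fi
  done
  if [ ! -f "$base.zip" ]; then
    echo "missing: $base.zip"
    ok=1
  fi
  return "$ok"
}
```

For example: `check_split_parts hope_train_pbr 3 && zip -s0 hope_train_pbr.zip --out hope_train_pbr_all.zip`.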

For the BOP Challenge 2024, we release an updated version of the dataset.

HANDAL (Coming soon)

Guo et al.: A Dataset of Real-World Manipulable Object Categories with Pose Annotations, Affordances, and Reconstructions, IROS 2023, project website, license: CC BY-SA 4.0.

A dataset with graspable or manipulable objects, such as hammers, ladles, cups, and power drills. Objects are captured from multiple views in cluttered scenes.

For the BOP Challenge 2024, we have captured additional test images. While the original dataset has 212 objects of 17 categories, we only consider 40 objects of 7 categories, each with a high-quality CAD model created by 3D artists.

LM (Linemod)

Hinterstoisser et al.: Model based training, detection and pose estimation of texture-less 3d objects in heavily cluttered scenes, ACCV 2012, project website, license: CC BY 4.0.

15 texture-less household objects with discriminative color, shape and size. Each object is associated with a test image set showing one annotated object instance with significant clutter but only mild occlusion.

LM-O (Linemod-Occluded)

Brachmann et al.: Learning 6d object pose estimation using 3d object coordinates, ECCV 2014, project website, license: CC BY-SA 4.0.

Provides additional ground-truth annotations for all modeled objects in one of the test sets from LM. This introduces challenging test cases with various levels of occlusion. Note that the PBR-BlenderProc4BOP training images are the same as for LM.

YCB-V (YCB-Video)

Xiang et al.: PoseCNN: A Convolutional Neural Network for 6D Object Pose Estimation in Cluttered Scenes, RSS 2018, project website, license: MIT.

21 YCB objects captured in 92 videos. Compared to the original YCB-Video dataset, the differences in the BOP version are:

- Only a subset of test images is used for the evaluation: 75 images were manually selected for each of the 12 test scenes (900 images in total) to remove redundancies and to avoid images with erroneous ground-truth poses. The list of selected images is in the file test_targets_bop19.json in the base archive. The selected images are a subset of the images listed in "YCB_Video_Dataset/image_sets/keyframe.txt" in the original dataset.

- The 3D models were converted from meters to millimeters, and the centers of their 3D bounding boxes were aligned with the origin of the model coordinate system. This transformation is also reflected in the ground-truth poses. The models were transformed so that they follow the same conventions as the models from the other datasets included in BOP and are thus compatible with the BOP toolkit.

- We additionally provide 50K PBR training images that were generated for the BOP Challenge 2020.

The 80K synthetic training images included in the original version of the dataset are also provided.
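The model conversion described in the YCB-V list above can be illustrated on a plain list of vertex coordinates. The sketch below assumes one `x y z` triple per line in meters (a simplification; the BOP models themselves are PLY meshes, and this is not the script used to produce them): it scales by 1000, computes the center c of the axis-aligned bounding box, and subtracts it, so X' = 1000·X − c. For the camera-frame geometry to stay unchanged, a ground-truth pose (R, t) with t in meters then becomes (R, 1000·t + R·c) with the translation in millimeters. The function name `center_model_mm` is hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: convert "x y z" vertex lines (read from stdin) from meters to millimeters
# and shift them so that the center of their axis-aligned 3D bounding box lies at the origin.
center_model_mm() {
  awk '
    NF >= 3 {
      n++
      for (k = 1; k <= 3; k++) {
        v[n, k] = $k * 1000                              # meters to millimeters
        if (n == 1 || v[n, k] < lo[k]) lo[k] = v[n, k]   # bounding-box minimum
        if (n == 1 || v[n, k] > hi[k]) hi[k] = v[n, k]   # bounding-box maximum
      }
    }
    END {
      for (k = 1; k <= 3; k++) c[k] = (lo[k] + hi[k]) / 2   # bounding-box center
      for (i = 1; i <= n; i++)
        printf "%g %g %g\n", v[i, 1] - c[1], v[i, 2] - c[2], v[i, 3] - c[3]
    }
  '
}
```

For example, `printf '0 0 0\n0.1 0.2 0.3\n' | center_model_mm` prints `-50 -100 -150` and `50 100 150`.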

We split the real training images into two parts: ycbv_train_real.zip (Part 1) and ycbv_train_real.z01 (Part 2). Once downloaded, these files can be unzipped directly using `7z x ycbv_train_real.zip` or re-merged using `zip -s0 ycbv_train_real.zip --out ycbv_train_real_all.zip`.

RU-APC (Rutgers APC)

Rennie et al.: A dataset for improved RGBD-based object detection and pose estimation for warehouse pick-and-place, Robotics and Automation Letters 2016, project website.

14 textured products from the Amazon Picking Challenge 2015 [6], each associated with test images of a cluttered warehouse shelf.

T-LESS

Hodan et al.: T-LESS: An RGB-D Dataset for 6D Pose Estimation of Texture-less Objects, WACV 2017, project website, license: CC BY 4.0.

30 industry-relevant objects with no significant texture or discriminative color. The objects exhibit symmetries and mutual similarities in shape and/or size, and a few objects are a composition of other objects. Test images originate from 20 scenes with varying complexity. Only images from Primesense Carmine 1.09 are included in the archives below. Images from Microsoft Kinect 2 and Canon IXUS 950 IS are available at the project website. However, only the Primesense images can be used in the BOP Challenge 2019/2020.

ITODD (MVTec ITODD)

Drost et al.: Introducing MVTec ITODD - A Dataset for 3D Object Recognition in Industry, ICCVW 2017, project website, license: CC BY-NC-SA 4.0.

28 objects captured in realistic industrial setups with a high-quality Gray-D sensor. The ground-truth 6D poses are publicly available only for the validation images, not for the test images.

HB (HomebrewedDB)

Kaskman et al.: HomebrewedDB: RGB-D Dataset for 6D Pose Estimation of 3D Objects, ICCVW 2019, project website, license: CC0 1.0 Universal.

33 objects (17 toy, 8 household and 8 industry-relevant objects) captured in 13 scenes with varying complexity. The ground-truth 6D poses are publicly available only for the validation images, not for the test images. The dataset includes images from Primesense Carmine 1.09 and Microsoft Kinect 2. Note that only the Primesense images can be used in the BOP Challenge 2019/2020.

IC-BIN (Doumanoglou et al.)

Doumanoglou et al.: Recovering 6D Object Pose and Predicting Next-Best-View in the Crowd, CVPR 2016, project website.

Test images of two objects from IC-MI, which appear in multiple locations with heavy occlusion in a bin-picking scenario.

IC-MI (Tejani et al.)

Tejani et al.: Latent-class hough forests for 3D object detection and pose estimation, ECCV 2014, project website.

Two texture-less and four textured household objects. The test images show multiple object instances with clutter and slight occlusion.

TUD-L (TUD Light)

Hodan, Michel et al.: BOP: Benchmark for 6D Object Pose Estimation, ECCV 2018, license: CC BY-SA 4.0.

Training and test image sequences show three moving objects under eight lighting conditions.

TYO-L (Toyota Light)

Hodan, Michel et al.: BOP: Benchmark for 6D Object Pose Estimation, ECCV 2018, license: CC BY-NC 4.0.

21 objects, each captured in multiple poses on a table-top setup, with four different tablecloths and five different lighting conditions.

The thumbnails of the datasets were obtained by rendering colored 3D object models in the ground-truth 6D poses over darkened test images.