BOP Challenge 2025

Theme: Model-based and model-free 2D/6D object detection on BOP-Classic, BOP-H3 and new BOP-Industrial datasets (XYZ-IBD, ITODD-MV, IPD).

- 23/Nov/2025 - The recordings of the ICCV'25 R6D Workshop with BOP winner presentation and result analysis are online.

- 16/Nov/2025 - The BOP Challenge 2025 Awards are online.

- 24/Aug/2025 - The default 2D detections for Track 6 (model-free 6D detection on BOP-H3) are available at BOP HuggingFace Hub.

- 19/Aug/2025 - All object-onboarding sequences for model-free tasks (including new sequences for HOT3D and HANDAL) are now available (search for "onboarding" links).

- 21/Jun/2025 - The BOP 2025 early-bird awards have been announced.

- 12/Jun/2025 - The first Perception Challenge for Bin-picking (BPC) has announced its winners; find out more in this video.

- 15/May/2025 - The ICCV 2025 Workshop on "Recovering 6D Object Pose" will take place in Honolulu, Hawai'i.

- 3/Apr/2025 - Q&A session on the OpenCV YouTube channel about BOP Challenge 2025 and the associated OpenCV Bin-Picking Challenge.

- 7/Mar/2025 - The BOP Challenge 2025 has been announced!

BOP Challenge 2025 is associated with the OpenCV Perception Challenge for Bin-picking (BPC), which offers $60,000 in prizes and is co-organized by OpenCV, Intrinsic, BOP, Orbbec, and University of Hawaii at Manoa. Phase 1 of the OpenCV challenge is evaluated on the IPD dataset, which is also included in BOP Challenge 2025, so participants can evaluate their pose accuracy in BOP before submitting a full Docker image to BPC.

1. Introduction

BOP 2017-2024: To measure the progress in 6D object pose estimation and related tasks, we created the BOP benchmark in 2017 and have been organizing challenges on the benchmark datasets together with the R6D workshops since then. The field has come a long way, with the accuracy in model-based 6D localization of seen objects improving by more than 50% in relative terms (from 56.9 to 86.0 Average Recall, AR) on the seven BOP core datasets. Since 2023, we have also evaluated a more practical yet more challenging task of model-based 6D localization of unseen objects, where new objects need to be onboarded just from their CAD models in at most 5 minutes on a single GPU. In 2024, the best method for this task achieved an impressive 82.5 AR. Also in 2024, we defined a new 6D object detection task, added model-free variants of all tasks, and introduced the BOP-H3 datasets focused on AR/VR scenarios.

New in BOP 2025: We are introducing the BOP-Industrial datasets and the multi-view problem setup. See below for details.

1.1 New datasets

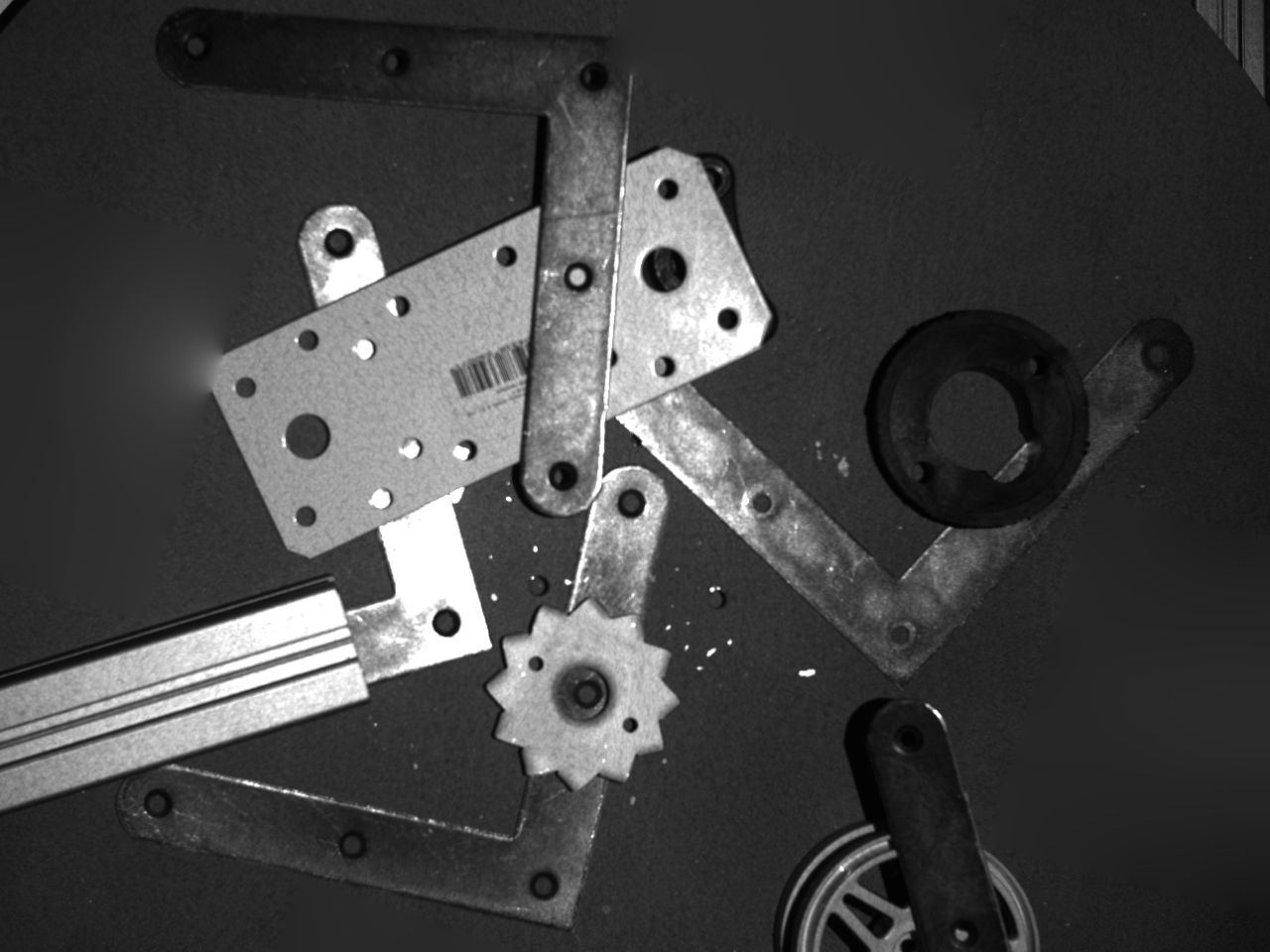

In 2025, we are introducing a new BOP-Industrial group of datasets that are specifically focused on robotic bin picking. BOP-Industrial includes three datasets – XYZ-IBD from XYZ Robotics, ITODD from MVTec, IPD from Intrinsic – showing cluttered scenes with industrial objects that are recorded with different industrial sensors (grayscale, multi-view, structured-light depth).

1.2 New multi-view problem setup

Industrial settings often rely on multiple top-down views. To address this, we provide multi-view images in all BOP-Industrial datasets, which enables a direct comparison of single- and multi-view approaches. By leveraging multi-view input images, participants can refine pose estimates and resolve pose ambiguities inherent in single-view approaches.

2. Important dates

Deadlines for submitting results:

- Challenge Start: Feb 1, 2025 (11:59PM UTC) - BOP Challenge 2025 opened.

- Early-bird deadline: June 1, 2025 (11:59PM UTC) - Results will be presented on Jun 11/12, 2025 at the CVPR Workshop on "Perception for Industrial Robotics Automation" in Nashville, Tennessee.

- Main deadline: October 1, 2025 (11:59PM UTC) - Results will be presented on Oct 19/20, 2025 at the ICCV Workshop on "Recovering 6D Object Pose" in Honolulu, Hawai'i.

3. Challenge tracks

Challenge tracks on BOP-Classic-Core datasets:

- Track 1: Model-based 6D localization of unseen objects on BOP-Classic-Core

- Track 2: Model-based 6D detection of unseen objects on BOP-Classic-Core

- Track 3: Model-based 2D detection of unseen objects on BOP-Classic-Core

Challenge tracks on BOP-H3 datasets:

- Track 4: Model-based 6D detection of unseen objects on BOP-H3

- Track 5: Model-based 2D detection of unseen objects on BOP-H3

- Track 6: Model-free 6D detection of unseen objects on BOP-H3

- Track 7: Model-free 2D detection of unseen objects on BOP-H3

Challenge tracks on BOP-Industrial datasets (single- and multi-view):

- Track 8: Model-based 6D detection of seen objects on BOP-Industrial

- Track 9: Model-based 2D detection of seen objects on BOP-Industrial

- Track 10: Model-based 6D detection of unseen objects on BOP-Industrial

- Track 11: Model-based 2D detection of unseen objects on BOP-Industrial

Default 2D detections/segmentations

As most recent methods for 6D object localization and detection start with a 2D detection stage, participants in the 6D localization and detection tasks are also encouraged to evaluate their methods using default 2D detections/segmentations (results of CNOS, the best 2D detection method for model-based 2D detection of unseen objects in 2023). Starting from the same 2D detections enables direct comparison of the object pose estimation stages.

- Default model-based 2D detections/segmentations for Tracks 1 and 2

- Default model-based 2D detections/segmentations for Track 4

- Default model-free 2D detections/segmentations for Track 6

- Default model-based 2D detections/segmentations for Track 8

- Default model-based 2D detections/segmentations for Track 10

4. Awards

Awards for all tracks:

- The Overall Best Method – The top method (achieving the highest accuracy).

- The Best Open-Source Method – The top method whose source code is publicly available.

Extra awards for tracks on 6D detection and localization:

- The Best Method Using Default Detections/Segmentations – The top method that relies on provided default detections/segmentations.

- The Best Fast Method – The top method with the average running time per image below 1s.

Extra awards for tracks on 6D detection on BOP-Industrial:

- The Best Single-View Method – The top method that relies on a single view (first entry of im_ids in test_targets_multiview_bop25.json).

- The Best Multi-View Method – The top method that relies on multiple views (all entries of im_ids in test_targets_multiview_bop25.json).

5. How to participate

For Track 1 on 6D localization, please follow instructions from BOP Challenge 2019.

For Tracks 3, 5, 7, 9, 11 on 2D detection, please follow instructions from BOP Challenge 2022.

For Tracks 2, 4, 6, 8, 10 on 6D detection, the difference compared to 6D localization is that no information about the target objects in the test scenes can be used. That means participants cannot use the information in test_targets_bop19.json (which provides the identities of object instances to localize), but only the test images and cameras defined in test_targets_multiview_bop25.json.

In the BOP Challenge 2025, we evaluate both single-view and multi-view methods on BOP-Industrial datasets. Authors of multi-view methods can use all views specified in the list im_ids in the test_targets_multiview_bop25.json files. The pose estimates are expected to be defined with respect to the camera associated with the first image listed in im_ids. Single-view methods can only rely on the first image listed in im_ids to detect a 6D pose. Single- and multi-view methods are distinguished when creating a method and will be labeled in the leaderboards.
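The view selection and reference-frame convention described above can be sketched as follows. This is a minimal illustration under stated assumptions, not part of the official toolkit: the helper names (select_views, to_homogeneous, pose_in_reference_camera), the shape of the target entry, and the example values are hypothetical. It also assumes that world-to-camera extrinsics are known for every view, so that a pose estimated in another camera's frame can be re-expressed in the frame of the first camera listed in im_ids.

```python
import numpy as np


def select_views(target, multi_view):
    """Return the image IDs a method may use for one target entry.

    Assumption: `target` is a dict with an "im_ids" list, where the first
    entry is the reference view. Single-view methods use only that entry.
    """
    im_ids = list(target["im_ids"])
    return im_ids if multi_view else im_ids[:1]


def to_homogeneous(R, t):
    """Build a 4x4 rigid transform from a 3x3 rotation and a 3-vector."""
    T = np.eye(4)
    T[:3, :3] = np.asarray(R, dtype=float).reshape(3, 3)
    T[:3, 3] = np.asarray(t, dtype=float).reshape(3)
    return T


def pose_in_reference_camera(T_m2c_k, T_w2c_k, T_w2c_ref):
    """Re-express a model-to-camera pose from camera k in the reference camera.

    model -> camera k -> world -> reference camera:
    T_m2c_ref = T_w2c_ref @ inv(T_w2c_k) @ T_m2c_k
    """
    return T_w2c_ref @ np.linalg.inv(T_w2c_k) @ T_m2c_k


# Hypothetical target entry; the real file layout may differ.
target = {"scene_id": 0, "im_ids": [3, 4, 5]}
single_view_ids = select_views(target, multi_view=False)
multi_view_ids = select_views(target, multi_view=True)

# Hypothetical setup: the reference camera coincides with the world frame,
# camera k is rotated 90 degrees about z and shifted (units: mm).
T_w2c_ref = np.eye(4)
Rz = np.array([[0.0, -1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, 1.0]])
T_w2c_k = to_homogeneous(Rz, [100.0, 0.0, 0.0])

# Ground-truth object pose in the world frame, and the same pose as it
# would be estimated in the frame of camera k.
T_m2w = to_homogeneous(np.eye(3), [0.0, 0.0, 500.0])
T_m2c_k = T_w2c_k @ T_m2w

# Pose to report: expressed with respect to the first camera in im_ids.
T_m2c_ref = pose_in_reference_camera(T_m2c_k, T_w2c_k, T_w2c_ref)
```

In this example the reference camera coincides with the world frame, so the reported pose T_m2c_ref equals the object's world pose T_m2w, which gives a quick sanity check of the transform chain.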

Results for all tracks should be submitted via this form. The online evaluation system uses the script eval_bop19_pose.py to evaluate 6D localization results, eval_bop24_pose.py to evaluate 6D detection results, and eval_bop22_coco.py to evaluate 2D detection/segmentation results.

A detailed description of an example baseline that relies on YOLOv11 for object detection and a simple network for pose regression is provided on GitHub.

WARNING: For the 6D detection tasks, unlike in the OpenCV Bin-Picking Challenge, only the information in test_targets_multiview_bop25.json may be used (IDs and numbers of the visible object instances cannot be used as input).

IMPORTANT: When creating a method, please document it in the "Description" field using this template:

*Submitted to:* BOP Challenge 2025

*Training data:* Type of images (e.g. real, provided PBR, custom synthetic, real + provided PBR), etc.

*Onboarding data:* Only for Tracks 6 and 7; type of onboarding (static, dynamic, model-based), etc.

*Notes:* Values of important method parameters, used 2D detection/segmentation method, details about the object representation built during onboarding, etc.

Terms & conditions

- To be considered for the awards and for inclusion in a publication about the challenge, the authors need to provide their full names and documentation of the method (including specifications of the computer used) through the online submission form. If your method is under review (CVPR, ECCV, etc.) and sharing your names on the BOP website may compromise anonymity, please provide your identity via email to bop.benchmark@gmail.com.

- The winners need to present their methods at the awards reception.

- After the submitted results are evaluated (by the online evaluation system), the authors can decide whether to make the scores visible to the public.

6. Organizers

Martin Sundermeyer, Google

Junwen Huang, TU Munich

Médéric Fourmy, Czech Technical University in Prague

Van Nguyen Nguyen, ENPC ParisTech

Agastya Kalra, Intrinsic

Vahe Taamazyan, Intrinsic

Caryn Tran, Northwestern University

Stephen Tyree, NVIDIA

Anas Gouda, TU Dortmund

Lukas Ranftl, MVTec

Jonathan Tremblay, NVIDIA

Eric Brachmann, Niantic

Bertram Drost, MVTec

Vincent Lepetit, ENPC ParisTech

Carsten Rother, Heidelberg University

Stan Birchfield, NVIDIA

Jiří Matas, Czech Technical University in Prague

Tomáš Hodaň, Reality Labs at Meta