BOP Challenge 2020

- 15/Sep/2020 - An analysis of the BOP Challenge 2020 results is now available in this ECCVW 2020 paper.

- 23/Aug/2020 - The winners of the BOP Challenge 2020 have been announced at the R6D workshop at ECCV 2020!

- 05/Jun/2020 - BOP Challenge 2020 has been opened!

Results of the 2019 and 2020 editions of the challenge are published in:

Tomáš Hodaň,

Martin Sundermeyer,

Bertram Drost,

Yann Labbé,

Eric Brachmann,

Frank Michel,

Carsten Rother,

Jiří Matas,

BOP Challenge 2020 on 6D Object Localization, ECCVW 2020

[PDF,

SLIDES,

BIB]

When referring to the BlenderProc4BOP renderer, please cite:

M. Denninger, M. Sundermeyer, D. Winkelbauer, D. Olefir, T. Hodaň, Y. Zidan, M. Elbadrawy, M. Knauer, H. Katam, A. Lodhi,

BlenderProc: Reducing the Reality Gap with Photorealistic Rendering, RSS Workshops 2020

[PDF,

CODE,

VIDEO,

BIB]

1. Introduction

As the 2019 edition, the 2020 edition of the BOP Challenge focuses on pose estimation of specific rigid objects. The 2019 and 2020 editions follow the same task definition, evaluation methodology, list of core datasets and instructions for participation, which are described on the page about the 2019 edition. This page only describes updates introduced in the 2020 edition. The 2019, 2020 and 2022 editions share the same leaderboard and the submission form for this leaderboard stays open to allow comparison with upcoming methods.

Photorealistic training images: In 2020, the challenge is focused on the synthesis of effective RGB training images. While learning from synthetic data has been common for depth-based pose estimation methods, the same is still difficult for RGB-based methods where the domain gap between synthetic training and real test images is more severe. Specifically for the challenge, we have therefore prepared BlenderProc4BOP, an open-source and light-weight physically-based renderer (PBR), and used it to render training images for each of the seven core datasets. With this addition, we hope to reduce the entry barrier of the challenge for participants working on learning-based RGB and RGB-D solutions. We are excited to see whether photorealistic training images will help to close the performance gap between depth-based and RGB-based methods that we have observed in the 2019 edition of the challenge. Participants are encouraged to build on top of the renderer and release their extensions.

Short papers in the ECCV 2020 workshop proceedings: Participants of the 2020 edition have the opportunity to document their methods by submitting a short paper to the 6th Workshop on Recovering 6D Object Pose (ECCV 2020). The paper will be reviewed by the organizational committee and accepted if it presents a method with competitive results or distinguishing features. The paper must have exactly 4 pages including references and, if accepted, will be published in the ECCV workshop proceedings. Note that besides the short papers, the workshop invites submissions of full papers (14 pages excluding references) on any topic related to 6D object pose estimation.

2. Important dates

- Submission deadline for results:

August 16August 19, 2020 (11:59PM UTC). The submission form stays open even after the deadline. - Submission deadline for short papers describing the methods:

August 16August 19, 2020 (11:59PM UTC). Submit the papers by e-mail to r6d.workshop@gmail.com. - Presentation of awards: August 23, 2020 (at the R6D workshop at ECCV 2020).

3. Photorealistic training images

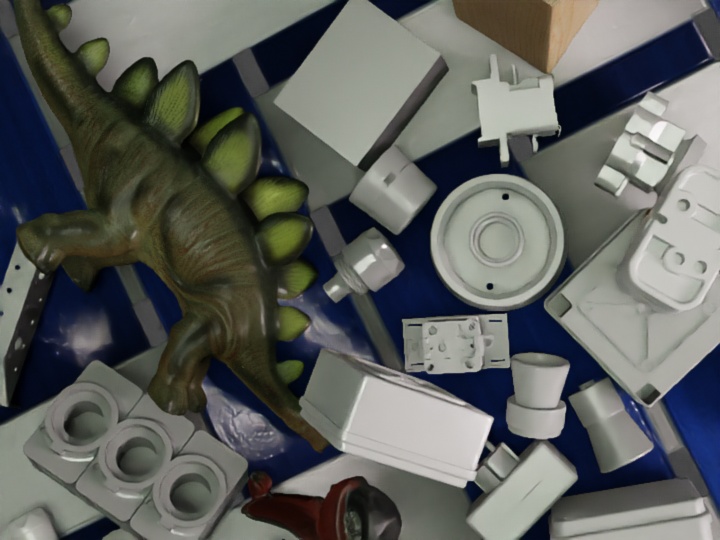

In the 2020 edition, we additionally provide 50K photorealistic training images for each of the seven core datasets: LM/LM-O, T-LESS, TUD-L, IC-BIN, ITODD, HB, YCB-V. The images were rendered with BlenderProc4BOP, an open-source and light-weight physically-based renderer (PBR) prepared for the BOP Challenge 2020. The renderer implements a synthesis approach similar to ObjectSynth. The objects are thrown inside a cube using physics simulations. A rich spectrum of the background is ensured by assigning a random PBR material from the CC0 Textures library to the walls of the cube. Example images are below.

4. Awards

- The Overall Best Method – The top-performing method on the seven core datasets.

- The Best RGB-Only Method – The top-performing RGB-only method on the seven core datasets.

- The Best Fast Method – The top-performing method on the seven core datasets with the average running time per image below 1s.

- The Best BlenderProc4BOP-Trained Method – The top-performing method on the seven core datasets which was trained only with the provided BlenderProc4BOP images.

- The Best Single-Model Method – The top-performing method on the seven core datasets which uses a single machine learning model (typically a neural network) per dataset.

- The Best Open-Source Method – The top-performing method on the seven core datasets whose source code is publicly available.

- The Best Method on Dataset D – The top-performing method on each of the available datasets.

The conditions which a method needs to fulfill in order to qualify for the awards are the same as in BOP Challenge 2019.

5. How to participate

The instructions for participation and the submission form are the same as in BOP Challenge 2019.

6. Organizers

Tomáš Hodaň, Czech Technical University in Prague

Martin Sundermeyer, DLR German Aerospace Center

Eric Brachmann, Heidelberg University

Bertram Drost, MVTec

Frank Michel, Technical University Dresden

Jiří Matas, Czech Technical University in Prague

Carsten Rother, Heidelberg University